Writing Finnegans Wake with a Recurrent Neural Net

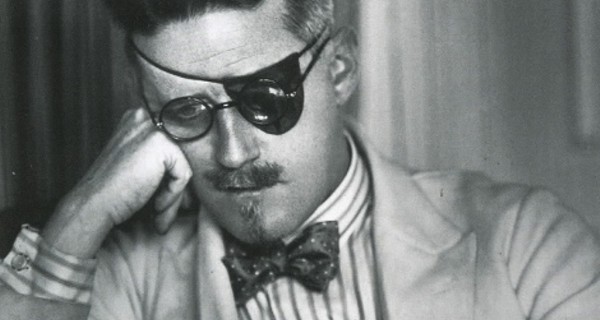

Last week Andrej Karpathy wrote a truly amazing post on Recurrent Neural Networks (RNNs), generative models of sequential data that can be used to automatically create text. Not only did he provide a wonderful explanation of the topic, he also released his source code. Since it was a holiday weekend, I decided to spend my time off playing with this code and one of the most fascinating texts of the 20th century: James Joyce's Finnegans Wake.

For those unfamiliar with the work, Finnegans Wake is one of the most complex and controversial books ever written. The text consists of over 600 pages of relentlessly non-linear narrative, written in a stream-of-consciousness style and packed with portmanteau words and odd onomatopoeia. Many readers and scholars alike consider the work entirely gibberish. At the same time, it has left an indelible mark on popular culture. For example, both the blogging platform "WordPress" and the fundamental particle "quark" derive their names from the text of Finnegans Wake. The great playwright Samuel Beckett and many other literary masters have viewed the work as pure genius. Finnegans Wake hangs over literary history as a perpetual and troublesome question.

To gain some insight into this question I wanted to take Karpathy's RNN, train it on the text of Finnegans Wake, and see what the results looked like. A character-level RNN like Karpathy's is particularly well suited to learning a strange text like Finnegans Wake because it learns one character at a time, rather than whole words or "tokens". Most generative language models (such as word-level Markov chains) assume a consistent vocabulary in the language, a rule which Finnegans Wake refuses to play by. It is at least some credit to the book that the majority of Natural Language Processing techniques would be as baffled by the text as many readers are. Below is output from the RNN trained on the work, followed by a passage from the original text for comparison:

Output from RNN:

Oracia not only soen for em. Praye in a bitary, like Seemeryth! My minnalnon, in his profish on. Soon from Nila! Well, Anna Mae, make, me hoven, I feel ox in Ir such of an Dona, the Oxentricies seen enough fatury bonefit!, Tooking, the worth and the cabbound marry, his fattime you boot, in jarque to the tare, that, this a man and in cottestian, which she starse to liet, a power or forey foot lips and reprobed you upon the lesh field thinaindus, place, lie and leave...

Excerpt from Joyce's Finnegans Wake:

The great fall of the offwall entailed at such short notice the pftjschute of Finnegan, erse solid man, that the humptyhillhead of humself prumptly sends an unquiring one well to the west in quest of his tumptytumtoes: and their upturnpikepointandplace is at the knock out in the park where oranges have been laid to rust upon the green since devlinsfirst loved livvy.

What clashes here of wills gen wonts, oystrygods gaggin fishygods! Brékkek...
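
To make the vocabulary point from earlier a little more concrete, here is a minimal sketch in Python. It is not Karpathy's code and it trains nothing; it only compares how much of the opening of the Joyce excerpt falls outside a fixed word list versus a fixed character alphabet. The names `KNOWN_WORDS` and `KNOWN_CHARS` are my own assumptions for illustration: a tiny hand-picked word list standing in for whatever vocabulary a word-level model would have learned, and a small alphabet of lowercase letters plus basic punctuation.

```python
import re
import string

# Opening of the Joyce excerpt quoted above.
EXCERPT = (
    "The great fall of the offwall entailed at such short notice the "
    "pftjschute of Finnegan, erse solid man, that the humptyhillhead of "
    "humself prumptly sends an unquiring one well to the west in quest of "
    "his tumptytumtoes"
)

# ASSUMPTION: a tiny hand-picked word list standing in for the fixed
# vocabulary a word-level model would have learned from ordinary English.
KNOWN_WORDS = {
    "the", "great", "fall", "of", "entailed", "at", "such", "short",
    "notice", "solid", "man", "that", "sends", "an", "one", "well",
    "to", "west", "in", "quest", "his",
}

# ASSUMPTION: a small character alphabet (lowercase letters, space,
# and basic punctuation) standing in for a character-level vocabulary.
KNOWN_CHARS = set(string.ascii_lowercase + " ,.:;!?'-")


def word_tokens(text):
    """Split text into lowercased alphabetic words (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())


def char_tokens(text):
    """Split text into lowercased single characters."""
    return list(text.lower())


def out_of_vocabulary(tokens, vocab):
    """Return the sorted distinct tokens that are not in the vocabulary."""
    return sorted(set(tokens) - set(vocab))


oov_words = out_of_vocabulary(word_tokens(EXCERPT), KNOWN_WORDS)
oov_chars = out_of_vocabulary(char_tokens(EXCERPT), KNOWN_CHARS)

print("Words outside the vocabulary:", oov_words)
print("Characters outside the alphabet:", oov_chars)
```

Under these assumed vocabularies, coinages such as "pftjschute" and "humptyhillhead" fall outside the word list, while every character of the excerpt is already in the alphabet. That is the sense in which a model working one character at a time never meets a symbol it has not seen, however strange the words become.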

Towards an Answer to Finnegans Wake?

The results are definitely interesting, but how can this weekend hack actually provide any insight into this monumental work of literature? The reason is that RNNs, while amazing, do in fact produce gibberish. Very well structured, surprisingly readable, and almost magical gibberish. The question that RNNs allow us to start answering about Finnegans Wake lies in the difference between the original and the simulation. If we cannot truly distinguish between Finnegans Wake and the output of an RNN, then, despite Joyce's best efforts, his work truly must be viewed as a literary curiosity with no more meaning than a Rorschach Test. However, if studying the two leads us to see a clear difference, then that difference itself is precisely what makes Joyce's text something other than nonsense.

In a way, this is the opposite of a Turing Test!

If you enjoyed this post please subscribe to keep up to date and follow @willkurt!