Random Variables and Expectation with Robots and Stuff!

Many issues in the practice of probability and statistics come from not spending enough time really understanding the basic tools we use when solving problems. Here I want to look at two concepts that are frequently misunderstood, in large part due to their terrible names. We'll start with Random Variables, which are neither random nor variables, then look at Expectation, which tells us very little about what we expect from a Random Variable.

Deterministic Functions aka Random Variables

This blog tends to favor intuition over mathematical definitions, but there is a very important reason we use math. Our intuitions are often trained by a lifetime of experience; throwing that out in the name of 'objectivity' foolishly excludes important information (we'll explore this in more detail in future posts on Bayesian priors). But intuition also likes to skip steps, which makes it very easy to introduce errors into our reasoning if we don't pull our intuition apart and check each step. To understand Random Variables we're going to start with a definition and see where our intuition can lead to misunderstanding.

Random Variable - A function that associates a real number with an event.

Why do we need Random Variables? Let's start with flipping a coin and find out. We'll use H to mean 'heads' and T for 'tails'. We flip our coin ten times and get:

THTHTHTTTT

We now have some great samples, but we have no way of calculating anything because arithmetic doesn't work well on letters. The obvious solution is to make a simple rule for transforming these letters into numbers. Here's our rule:

If we see a 'T' we'll output 0, if we see 'H' we'll output 1.

Our original output is then transformed into:

0101010000
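The rule above is small enough to write down as code. Here is a minimal Python sketch (the names `X`, `flips`, and `numbers` are our own, chosen to match the notation used later in this post). Notice that nothing in it is random: the same letter always produces the same number.

```python
def X(omega: str) -> int:
    """Random Variable for a coin flip: 'H' -> 1, 'T' -> 0."""
    rule = {"H": 1, "T": 0}
    return rule[omega]

flips = "THTHTHTTTT"
# Apply the same deterministic rule to every observed flip.
numbers = [X(omega) for omega in flips]
print("".join(str(n) for n in numbers))  # prints 0101010000
```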

And this is exactly where people start to get confused. This sequence of 1s and 0s is the output of our Random Variable. The Random Variable is the thing that translates 'H' or 'T' into 1 or 0. But when we hear "Random Variable" it's very tempting to think "Oh this must work like a 'Random Number Generator' where each time I look at this variable it has a random value". To further add to the problem, this is how many introductory textbooks actually explain Random Variables. To clear this up we'll build an imaginary Random Number Generating Machine.

Our Random Number Generating Machine is awesome! You just turn it on and it spits out numbers randomly! But how does it work? Inside there are two main components. First is the Sampler.

The Sampler is a happy robot that finds nothing more pleasurable than flipping a coin over and over again and reporting the results. After the Sampler we have our Random Variable.

The Random Variable is just a machine that takes our rule for translating events into numbers and applies it to the output of the Sampler. Now we can open up our Random Number Generating Machine and see how it works.

Looking inside the machine it becomes clear that the Random Variable is a useful, but not particularly complicated machine. It's really just a little tool we use to transform the output from our Sampler into numbers we can do math with.
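Here is one way to sketch the two components in Python, assuming a fair coin and using the standard `random` module for the Sampler (the function names `sampler`, `X`, and `random_number_machine` are illustrative, not from any library). All of the randomness lives in `sampler`; `X` is an ordinary deterministic function.

```python
import random

def sampler(rng: random.Random) -> str:
    """The Sampler robot: flips a fair coin and reports 'H' or 'T'."""
    return rng.choice(["H", "T"])

def X(omega: str) -> int:
    """The Random Variable: a fixed rule, 'H' -> 1 and 'T' -> 0."""
    return 1 if omega == "H" else 0

def random_number_machine(rng: random.Random) -> int:
    """The whole machine: the Sampler's output is fed into the Random Variable."""
    return X(sampler(rng))

rng = random.Random(42)  # seeded only so the run can be repeated
outputs = [random_number_machine(rng) for _ in range(10)]
print(outputs)  # ten 0s and 1s; which ones depends on the seed

# X itself never changes its answer for the same input.
print(X("H"), X("H"), X("H"))  # prints 1 1 1
```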

Probability and Random Variables

Random Variables are typically written with capital letters. A Random Variable takes an \(\omega\) (little omega) parameter, which represents the event our Sampler passes in. The mathematical definition of the Random Variable in our coin example looks like this:

$$X(\omega) =\begin{cases}1 & \text{if } \omega \text{ is Heads} \\ 0 & \text{if } \omega\text{ is Tails} \end{cases}$$

For our next examples we'll switch from a coin to this exciting spinner!

Wow!

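We can see that for this spinner \( P(\text{yellow}) = 1/2\), \( P(\text{red}) = 1/4 \), \( P(\text{blue}) = 1/4 \). Next we define our Random Variable \(A\):

$$A(\omega) =\begin{cases}2 & \text{if } \omega \text{ is yellow} \\ 1 & \text{if } \omega\text{ is red} \\ 0 & \text{if } \omega\text{ is blue} \end{cases}$$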

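Just to drive the point home again, we can assign any numbers we want to these colors. Here is a slightly stranger Random Variable \(B\) we could assign to the spinner:

$$B(\omega) =\begin{cases}-200 & \text{if } \omega \text{ is yellow} \\ 70{,}000 & \text{if } \omega\text{ is red} \\ 12 & \text{if } \omega\text{ is blue} \end{cases}$$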

Whether or not \(B\) is preferable to \(A\) depends a lot on the situation. If landing on "yellow" causes you to lose 200 points in a game and "red" and "blue" cause a gain of 70,000 and 12 points respectively, \(B\) is the more sensible Random Variable. Even though how we define a Random Variable is arbitrary, we need this map because we cannot do any arithmetic on "yellow". We can use our Random Variable to ask questions about the behavior of our Sampler. For example:

$$P(A=2) = 1/2$$

There are already two annoying things here. The first question you should ask is "Wait, I thought \(A\) was just a function; what does it mean to take the probability of a function?" This is another reason Random Variables are misunderstood. Mathematical notation is very concise, which saves trees but causes headaches. When we define our Random Variable we identify what \(\omega\) is, and from then on we assume it is the Sampler passing values to our Random Variable. So \(P(A=2)\) is shorthand for "the probability that the Sampler produces an \(\omega\) for which \(A(\omega) = 2\)", written out in full as \(P(\{\omega : A(\omega) = 2\})\).
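The shorthand can be spelled out in code: add up the probability of every outcome that \(A\) sends to the value we ask about. This is a minimal sketch using exact fractions; it assumes \(A\) maps yellow to 2, red to 1, and blue to 0 (the mapping consistent with the Expectation calculation later in this post), and the helper name `prob_rv_equals` is hypothetical.

```python
from fractions import Fraction

# Spinner outcomes and their probabilities, as given in the text.
spinner = {"yellow": Fraction(1, 2), "red": Fraction(1, 4), "blue": Fraction(1, 4)}

def A(omega: str) -> int:
    """Assumed mapping for A: yellow -> 2, red -> 1, blue -> 0."""
    return {"yellow": 2, "red": 1, "blue": 0}[omega]

def prob_rv_equals(rv, value, dist) -> Fraction:
    """P(rv = value): total probability of all outcomes omega with rv(omega) == value."""
    return sum((p for omega, p in dist.items() if rv(omega) == value), Fraction(0))

print(prob_rv_equals(A, 2, spinner))  # prints 1/2
```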

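The second question is likely "Why not just say \(P(\text{yellow})\) if it means the same thing as \(P(A=2)\)? It would save us from building the machine in the first place!" The answer is that we can ask much more complicated questions, such as \(P(A \geq 1)\), which lumps two different colors together, or questions that combine several Random Variables defined on the same Sampler.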
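Here is a sketch of what such questions look like once outcomes are numbers: instead of asking about one value, we total the probability of every outcome for which some yes/no question about \(\omega\) is true. It assumes the same mapping for \(A\) as above (yellow 2, red 1, blue 0) and the points-game mapping for \(B\) (yellow -200, red 70,000, blue 12); the helper `prob` is our own illustrative name.

```python
from fractions import Fraction

spinner = {"yellow": Fraction(1, 2), "red": Fraction(1, 4), "blue": Fraction(1, 4)}

def A(omega: str) -> int:
    """Assumed mapping for A."""
    return {"yellow": 2, "red": 1, "blue": 0}[omega]

def B(omega: str) -> int:
    """Points-game mapping for B."""
    return {"yellow": -200, "red": 70_000, "blue": 12}[omega]

def prob(event, dist) -> Fraction:
    """Total probability of the outcomes omega for which event(omega) is True."""
    return sum((p for omega, p in dist.items() if event(omega)), Fraction(0))

# "A is at least 1" covers yellow and red at once.
print(prob(lambda w: A(w) >= 1, spinner))    # prints 3/4
# A question comparing two Random Variables on the same spinner.
print(prob(lambda w: B(w) > A(w), spinner))  # prints 1/2
```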

Expectation: not really what you were expecting

Okay, for this one stay away from your intuitions! Let's go straight to the definition:

The Expectation of a (discrete) Random Variable is the sum of its values weighted by their probability.

For a concrete example, let's calculate the Expected Values of our Random Variables \(A\) and \(B\):

$$E(A) = 2\cdot 1/2 + 1\cdot 1/4 + 0\cdot 1/4 = 1 \frac{1}{4}$$

$$E(B) = -200\cdot 1/2 + 70{,}000\cdot 1/4 + 12\cdot 1/4 = -100 + 17{,}500 + 3 = 17{,}403$$
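The definition translates almost word for word into code: multiply each value by the probability of the outcome that produces it, then add. This sketch uses Python's `fractions.Fraction` so the arithmetic is exact, and assumes the same mappings for \(A\) and \(B\) as in the calculation above; `expectation` is a hypothetical helper name.

```python
from fractions import Fraction

spinner = {"yellow": Fraction(1, 2), "red": Fraction(1, 4), "blue": Fraction(1, 4)}

def A(omega: str) -> int:
    return {"yellow": 2, "red": 1, "blue": 0}[omega]

def B(omega: str) -> int:
    return {"yellow": -200, "red": 70_000, "blue": 12}[omega]

def expectation(rv, dist) -> Fraction:
    """Sum of rv(omega) weighted by the probability of each outcome omega."""
    return sum((rv(omega) * p for omega, p in dist.items()), Fraction(0))

print(expectation(A, spinner))  # prints 5/4
print(expectation(B, spinner))  # prints 17403
```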

We can see that, in general, two Random Variables defined over the same probability space can have very different Expectations. The word "expected" has little to do with the Expectation of a Random Variable. What does it mean to say you "expected" 17,403 from \(B\), when \(B\) can only ever output -200, 70,000, or 12? We can come up with specific contexts where this number is something we expect, but that depends on what we are talking about. Expectation is merely a single number, calculated as defined, that summarizes the output of a Random Variable. Let's visualize it as another machine!

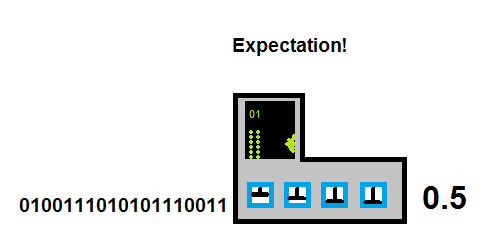
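A small simulation makes the point about 17,403 concrete. This sketch (our own illustration, using the standard `random` module with a fixed seed; the variable names are hypothetical) spins the spinner 100,000 times and applies \(B\) to each result. No single spin ever produces 17,403, yet the average of all the outputs lands close to it.

```python
import random

outcomes = ["yellow", "red", "blue"]
weights = [0.5, 0.25, 0.25]  # P(yellow), P(red), P(blue)

def B(omega: str) -> int:
    """The points-game Random Variable for the spinner."""
    return {"yellow": -200, "red": 70_000, "blue": 12}[omega]

rng = random.Random(0)  # fixed seed so the run is repeatable
spins = rng.choices(outcomes, weights=weights, k=100_000)
values = [B(omega) for omega in spins]

print(sorted(set(values)))        # prints [-200, 12, 70000]
print(sum(values) / len(values))  # close to 17403, the Expectation of B
```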

At this point it may seem a bit silly to build an Expectation machine, but it will be extremely useful in an upcoming post on Variance, Covariance, and Correlation. Below is our entire assembly line for creating the Expectation for the Random Variable \(A\) based on the spinner.

Wait a second, isn't Expectation just...

YES! Okay, Expectation is just the mean of a Random Variable! But words like "mean" and "average" carry a special intuition (that the mean is a typical, likely value) which only holds for Random Variables over certain distributions. This is exactly the situation where intuition gets you in trouble. When you apply the intuitions you have for the mean of a Normal distribution to a bimodal one, you start making mistakes: the mean of a bimodal distribution can sit between the two peaks, right where very few outcomes actually land. There are also plenty of real-world cases where Expectation is actually what you expect, but again this is not universal. Expectation is a machine that squashes the output of a Random Variable into a single number.
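To see how the mean of a bimodal distribution can mislead, here is a sketch under assumptions of our own: an equal mix of two Normal distributions centered at -5 and +5, each with standard deviation 1, sampled with the standard `random` module (`bimodal_sample` is a hypothetical name). The mean comes out near 0, yet almost none of the samples land within 1 of it.

```python
import random

rng = random.Random(1)  # fixed seed so the run is repeatable

def bimodal_sample(rng: random.Random) -> float:
    """Equal mixture of two Normals centered at -5 and +5, each with sd 1."""
    center = rng.choice([-5.0, 5.0])
    return rng.gauss(center, 1.0)

samples = [bimodal_sample(rng) for _ in range(100_000)]
mean = sum(samples) / len(samples)
# Fraction of samples that fall within 1 unit of the mean.
near_mean = sum(1 for x in samples if abs(x - mean) < 1) / len(samples)

print(f"mean: {mean:.3f}")
print(f"fraction of samples within 1 of the mean: {near_mean:.5f}")
```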

Next week's posts will continue with a discussion of Variance, Covariance, and Correlation, which are much more appropriately named.

If you enjoyed this post, please subscribe to keep up to date!